We tell you all you need to know

As the newer technology, HDMI improves on the older VGA interface in almost every respect. Whether you compare transmission rate, refresh rate, video resolution, or the nature of the signal itself, HDMI comes out ahead.

That being said, VGA is far from extinct. While the older interface is gradually being phased out, many devices still use VGA ports, making it important to understand its strengths and limitations. So here is a primer on the differences and features of the VGA and HDMI interfaces.

Introduction to VGA and HDMI

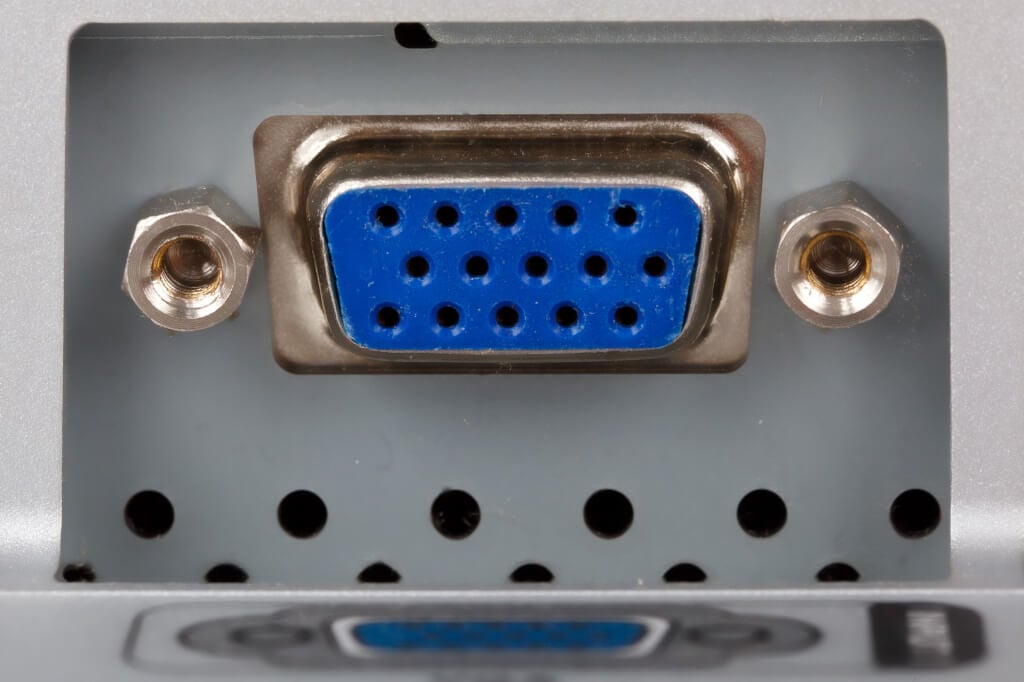

VGA (Video Graphics Array) is a display interface designed by IBM for computer monitors back in 1987. The 15-pin, three-row VGA port became ubiquitous on PC motherboards and graphics cards, and even appeared on some gaming consoles and DVD players.

As the name suggests, the VGA interface carries only visual information, and at a resolution considered low by today’s standards. Yet its easy compatibility and widespread manufacturer support kept it in service for decades, until around 2010, when major PC and chip makers announced plans to phase it out in favor of digital interfaces such as HDMI and DisplayPort.

HDMI (High Definition Multimedia Interface) was introduced in 2002 to carry both audio and video over a single cable, at much higher resolutions and frame rates. Over the next several years, it quickly became the de facto standard for multimedia connectivity.

The HDTVs that followed swiftly adopted this technology, using HDMI connectors as a unified audio-visual interface. And because HDMI's video signal is electrically compatible with DVI (Digital Visual Interface), devices with DVI ports could connect to HDMI displays through a simple passive adapter.

VGA users, however, are left in the lurch. Because VGA is analog, connecting it to an HDMI input requires an active converter that digitizes the signal, and even then performance can be spotty. It is little surprise, then, that gaming consoles and streaming devices have joined computers in ditching the VGA port.

The Fundamental Difference: Analog vs Digital

The most obvious difference between the two interfaces is the type of signal used. VGA connections carry analog video signals, while HDMI is meant for digital transmission.

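But what does that mean? In short, an analog signal varies continuously, so every voltage within its range carries meaning, while a digital signal is made up of discrete values, typically read as 0s and 1s.

Analog signals are simpler to generate and transmit, but they use bandwidth less efficiently, and any noise picked up along the way becomes part of the picture. Digital signals, on the other hand, can pack a lot of information and are far less susceptible to interference, since the receiver only has to tell one discrete level from another.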
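To make the noise argument concrete, here is a toy Python simulation; it is not a model of the real VGA or HDMI electrical layers. It assumes a single pixel brightness (0 to 255) sent either as one analog voltage scaled to 0 to 0.7 V, roughly VGA's colour-signal range, or as eight separate bits at 0 V or 1 V. The function names `send_analog` and `send_digital` are hypothetical.

```python
import random

# Toy model only: one pixel's brightness sent as an "analog" voltage
# versus as 8 bits, each bit sent as a 0 V or 1 V level.

def send_analog(value: int, noise: float, rng: random.Random) -> int:
    """Scale 0-255 to 0-0.7 V, add noise, then read the level back."""
    volts = value / 255 * 0.7
    received = volts + rng.uniform(-noise, noise)
    # The receiver can only guess the original level from the noisy voltage.
    return max(0, min(255, round(received / 0.7 * 255)))

def send_digital(value: int, noise: float, rng: random.Random) -> int:
    """Send each of the 8 bits as 0 V or 1 V and threshold at 0.5 V."""
    result = 0
    for bit in range(7, -1, -1):  # most significant bit first
        level = float((value >> bit) & 1)
        received = level + rng.uniform(-noise, noise)
        result = (result << 1) | (1 if received > 0.5 else 0)
    return result

rng = random.Random(0)
pixel = 200
noise = 0.05  # +/- 50 mV of interference (an arbitrary assumption)
print("analog :", send_analog(pixel, noise, rng))
print("digital:", send_digital(pixel, noise, rng))
```

As long as the noise stays below 0.5 V, the digital path recovers 200 exactly, because each bit only has to land on the correct side of the threshold; the analog reading usually lands several brightness levels away.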

HDMI: Full Multimedia Transmission

VGA was designed to carry video alone: its pins carry separate red, green, and blue analog signals plus horizontal and vertical sync, with no provision for audio. Even that video falls short of the image quality HDMI delivers.

HDMI, on the other hand, carries digital video, audio, and auxiliary data over a single cable. Audio travels as data packets in the blanking intervals between video lines and frames, so neither stream compromises the fidelity of the other.
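HDMI can fit audio alongside video because a video raster includes blanking intervals, the periods between lines and frames when no visible pixels are sent, and HDMI uses part of that time for data packets. The sketch below assumes the standard CEA-861 timing for 1080p at 60 Hz (a 2200×1125 total raster around the 1920×1080 picture) and estimates how much of each frame is blanking. Only part of that time is actually usable for audio and other packets.

```python
# Assumed standard 1080p60 timing (CEA-861): 1920x1080 visible pixels inside
# a 2200x1125 total raster, refreshed 60 times per second.
ACTIVE_W, ACTIVE_H = 1920, 1080
TOTAL_W, TOTAL_H = 2200, 1125
REFRESH_HZ = 60

total_px = TOTAL_W * TOTAL_H       # pixel periods per frame
active_px = ACTIVE_W * ACTIVE_H    # pixel periods carrying visible video
blank_px = total_px - active_px    # pixel periods in the blanking intervals

pixel_clock_hz = total_px * REFRESH_HZ
blank_share = blank_px / total_px

print(f"pixel clock: {pixel_clock_hz / 1e6:.1f} MHz")
print(f"blanking share of each frame: {blank_share:.1%}")
```

This prints a pixel clock of 148.5 MHz and a blanking share of 16.2%, time in which no picture data is being sent.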

Depending on the version, a single HDMI cable can carry up to 8 channels of uncompressed audio (32 channels from HDMI 2.0 onward), including lossless formats such as Dolby TrueHD, alongside a 1080p or higher-resolution video stream. This has made HDMI the mainstay interface for high-performance applications like 4K gaming consoles and Blu-ray players.
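A quick back-of-the-envelope calculation shows why audio is easy to accommodate: even 8 channels of uncompressed 24-bit, 192 kHz audio is small next to an uncompressed 1080p60 picture. This sketch counts raw payload only, ignoring blanking, packet overhead, and compression; the helper names are illustrative, not part of any HDMI API.

```python
def lpcm_bitrate(channels: int, sample_rate_hz: int, bits: int) -> int:
    """Raw bitrate of uncompressed PCM audio, in bits per second."""
    return channels * sample_rate_hz * bits

def video_bitrate(width: int, height: int, fps: int, bits_per_px: int = 24) -> int:
    """Raw bitrate of the visible pixels only (no blanking, no compression)."""
    return width * height * fps * bits_per_px

audio = lpcm_bitrate(channels=8, sample_rate_hz=192_000, bits=24)
video = video_bitrate(1920, 1080, 60)  # 8 bits per colour, RGB

print(f"8-ch 24-bit/192 kHz audio: {audio / 1e6:.1f} Mbit/s")
print(f"1080p60 8-bit RGB video:   {video / 1e9:.2f} Gbit/s")
print(f"audio as a share of video: {audio / video:.1%}")
```

The audio works out to about 36.9 Mbit/s against roughly 2.99 Gbit/s of video, so the picture needs exactly 81 times the bandwidth of the sound (about 1.2%).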

VGA: Simpler and Faster

Because an HDMI stream is digital, the display has to decode it before anything appears on screen, and most digital displays also buffer and process each frame before showing it. This can add a small amount of input lag, though most of it comes from the display's own processing rather than from the HDMI link itself.

On a CRT monitor, VGA does not have this problem. Its analog signals drive the electron beam directly, so the image appears on screen without buffering or conversion. A flat-panel display, by contrast, has to digitize an incoming VGA signal first, which erodes much of this advantage. This low input lag is VGA's only real saving grace, as it loses to HDMI on every other metric.

Unfortunately, this advantage rarely matters in practice. Input lag slightly delays what appears on screen but does not affect image quality at all, so unless the content is extremely time-sensitive, there is no discernible advantage in using VGA.
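To put "slight delay" in numbers: if a display holds whole frames before showing them, each buffered frame adds one frame time of latency. The sketch below assumes that simple model (real displays vary, and some process partial frames), and `buffering_lag_ms` is a hypothetical helper.

```python
def buffering_lag_ms(frames_buffered: float, refresh_hz: float) -> float:
    """Delay added by holding whole frames before display, in milliseconds."""
    return frames_buffered * 1000.0 / refresh_hz

# One buffered frame at common refresh rates.
for hz in (60, 120, 240):
    print(f"{hz:>3} Hz: one buffered frame adds {buffering_lag_ms(1, hz):.1f} ms")
```

At 60 Hz one buffered frame costs about 16.7 ms, dropping to about 8.3 ms at 120 Hz and 4.2 ms at 240 Hz, which is noticeable in competitive gaming but irrelevant for watching a film.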

HDMI: Flexible and Stable

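Anyone who has worked with old CRT monitors knows that you cannot always plug a VGA monitor into a running computer and expect it to be detected: VGA has no dedicated signal to announce a newly connected display. HDMI, by contrast, includes a Hot Plug Detect line for exactly this purpose.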

This capability, called hot-plugging, lets HDMI displays be connected or swapped on the fly, without restarting the system generating the video feed. For many commercial applications, it is a lifesaver.
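HDMI signals a connection through a dedicated Hot Plug Detect (HPD) pin that goes high when a display is attached; the source then typically reads the display's capabilities (its EDID) and starts sending video. The sketch below is a simplified, hypothetical model of the source side: it turns a series of sampled HPD levels into connect and disconnect events by watching for edges. Real devices also debounce the line and handle brief pulses, which this ignores.

```python
from typing import Iterable, List

def hotplug_events(hpd_samples: Iterable[bool]) -> List[str]:
    """Turn sampled HPD levels (True = high) into connect/disconnect events.

    A low-to-high transition means a display was attached; a high-to-low
    transition means it was unplugged.
    """
    events: List[str] = []
    previous = False  # assume no display is connected at start-up
    for level in hpd_samples:
        if level and not previous:
            events.append("connect")     # rising edge: display attached
        elif previous and not level:
            events.append("disconnect")  # falling edge: display removed
        previous = level
    return events

print(hotplug_events([False, False, True, True, False, True]))
```

For the sample sequence above the function prints `['connect', 'disconnect', 'connect']`: the system reacts to each edge instead of needing a restart.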

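HDMI cables are also less prone to electromagnetic interference. Their signals are digital and travel over shielded twisted pairs using differential signaling, so noise that affects both wires of a pair largely cancels out at the receiver. This makes them a better choice than VGA near sources of electrical noise, such as motors or power equipment.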
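Differential signaling is easy to illustrate in a few lines. In this toy sketch (not HDMI's actual TMDS encoding), each bit is sent either on a single wire compared against ground, closer to how VGA's colour lines are carried, or on a wire pair driven in opposite directions. The simplifying assumption is that interference adds the same voltage to both wires of a pair; the function names are hypothetical.

```python
import random
from typing import List

def transmit_single_ended(bits: List[int], noise: float, rng: random.Random) -> List[int]:
    """One wire at +0.5 V or -0.5 V; noise adds directly to the signal."""
    out = []
    for bit in bits:
        v = 0.5 if bit else -0.5
        out.append(1 if v + rng.uniform(-noise, noise) > 0 else 0)
    return out

def transmit_differential(bits: List[int], noise: float, rng: random.Random) -> List[int]:
    """Two wires driven oppositely; the receiver reads their difference."""
    out = []
    for bit in bits:
        v = 0.5 if bit else -0.5
        plus, minus = v, -v
        n = rng.uniform(-noise, noise)   # same interference on both wires
        diff = (plus + n) - (minus + n)  # common-mode noise cancels
        out.append(1 if diff > 0 else 0)
    return out

rng = random.Random(42)
bits = [rng.randint(0, 1) for _ in range(10_000)]
for name, link in (("single-ended", transmit_single_ended),
                   ("differential", transmit_differential)):
    received = link(bits, 2.0, random.Random(7))
    errors = sum(a != b for a, b in zip(bits, received))
    print(f"{name}: {errors} bit errors out of {len(bits)}")
```

Under this idealized model the differential link reports zero errors while the single-ended one loses a large share of bits. Real interference never hits both wires identically, which is why HDMI also twists and shields each pair.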

HDMI: More Pixels That Refresh Faster

Not only does HDMI support far higher resolutions than VGA (HDMI 2.0 already handles 4K at 60 Hz), it also supports much higher refresh rates, with recent versions reaching 240 Hz. This advantage is no longer just theoretical: high-end monitors and UHD TVs regularly offer these advanced specs.
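Raw bandwidth explains why higher resolutions and refresh rates need newer HDMI versions. The sketch below assumes HDMI 2.0's 18 Gbit/s link rate with 8b/10b line coding (about 14.4 Gbit/s of usable data) and counts only visible pixels at 8 bits per colour. Because it ignores blanking, it gives a lower bound: it can rule a mode out, but not confirm that it fits. The constant and helper names are illustrative.

```python
# Assumption: HDMI 2.0 carries 18 Gbit/s on the wire; 8b/10b coding leaves 80% for data.
HDMI_2_0_DATA_GBPS = 18.0 * 8 / 10

def min_video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> float:
    """Lower bound on uncompressed video rate: visible pixels only."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

for w, h, hz in [(1920, 1080, 60), (3840, 2160, 60), (3840, 2160, 120)]:
    need = min_video_gbps(w, h, hz)
    verdict = ("exceeds HDMI 2.0" if need > HDMI_2_0_DATA_GBPS
               else "may fit HDMI 2.0 (blanking not counted)")
    print(f"{w}x{h} @ {hz} Hz: at least {need:.2f} Gbit/s -> {verdict}")
```

4K at 60 Hz needs at least 11.94 Gbit/s, under the roughly 14.4 Gbit/s budget, while 4K at 120 Hz needs at least 23.89 Gbit/s, which is why it requires HDMI 2.1.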

A more recent version of the standard, HDMI 2.1a, supports even 8K, along with dynamic HDR formats like Dolby Vision and HDR10+.

In contrast, the original VGA standard topped out at 640×480. The analog VGA connector was later pushed much further, with high-end CRT monitors accepting 2048×1536, but image sharpness suffers at such settings, especially over long or low-quality cables. Together with the lack of audio, this is a big reason why TV and computer display manufacturers have shifted to HDMI.
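One way to see why high resolutions strain an analog link is the pixel clock: the rate at which the graphics card must change the voltage on each colour line, counting every pixel period in the raster, visible or blanking. The sketch below uses the original 640×480 VGA timing (an 800×525 total raster at 59.94 Hz) and the VESA 1600×1200 at 60 Hz timing (a 2160×1250 total raster) as assumed examples; `pixel_clock_mhz` is a hypothetical helper.

```python
def pixel_clock_mhz(total_width: int, total_height: int, refresh_hz: float) -> float:
    """Pixel periods per second across the whole raster, in MHz."""
    return total_width * total_height * refresh_hz / 1e6

# Total raster sizes (visible area plus blanking) for two modes carried over VGA.
modes = {
    "640x480 @ 59.94 Hz (original VGA)": (800, 525, 59.94),
    "1600x1200 @ 60 Hz (VESA)": (2160, 1250, 60),
}
for name, (tw, th, hz) in modes.items():
    print(f"{name}: {pixel_clock_mhz(tw, th, hz):.3f} MHz")
```

The clock rises from about 25.175 MHz to 162 MHz, more than six times faster. Every component of the analog chain must keep up with that rate, and any shortfall shows up as blurring or ghosting rather than a clean failure.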

VGA vs HDMI: In a Nutshell

HDMI is clearly the better interface for transmitting any multimedia stream. It eliminates the need for a separate audio cable and offers higher resolutions and frame rates. DisplayPort is the main alternative offering similar features.

That is to be expected, however, considering that VGA is a much older technology. For its time, VGA was a surprisingly capable interface, carrying video over simple analog signals with minimal input lag.

But like most aging standards, VGA is nearing the end of its days. In this age of 4K graphics and 120 Hz monitors, HDMI is an essential part of the entertainment ecosystem, while VGA is mainly useful for connecting older devices such as legacy projectors.